Robot Recycling Mechatronics Project

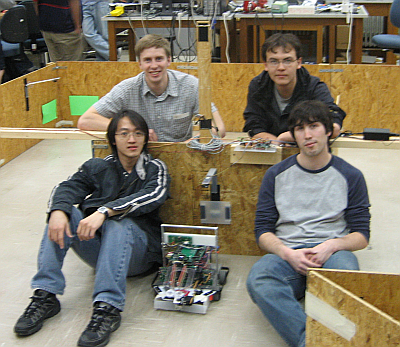

Aaron Becker, Sal Candido, Jenn Honn Khong, Dan McKenna

Objective

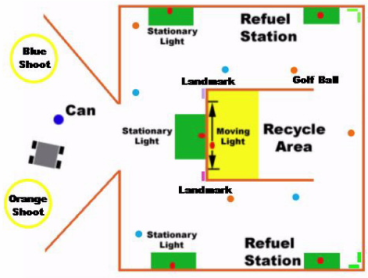

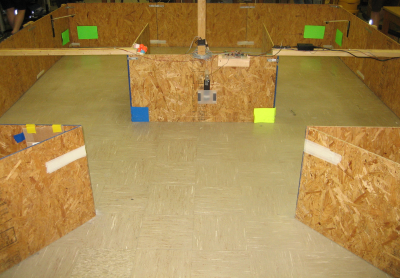

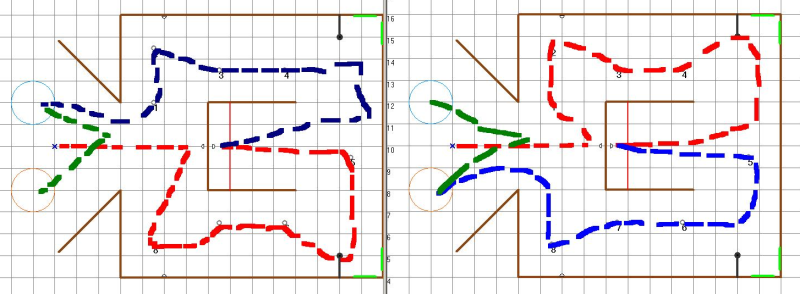

The contest has two objectives. The first is to pick up a soda can and deposit it in the Recycle Area of the course while moving through a sequence of waypoints determined randomly by the course. The second objective is to collect golf balls (trash) and dispose of them in the appropriate garbage chute (this can be done on the way to the Recycle Area or after). The schematic and a picture of the course are shown above.

Some tools required to complete the course include:

- left and right wall following

- finding, gripping, and carrying a soda can

- object recognition using image processing

- collection, sorting, and drop-off of orange and blue golf balls

The goal is to complete the objectives accurately and quickly.

Media

Videos: Contest Winning Run, Alternate Direction Run, Music Video

Components

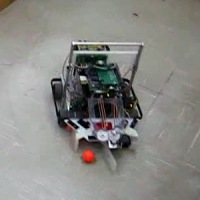

The robot is based on a common robotics platform used in the class, developed by Dan Block of the UIUC Control Systems Laboratory. The team developed a can gripper and a golf ball collecting mechanism. The project used a color camera, range sensors, bearing sensors, acceleration sensors, odometry, and RC Servos.

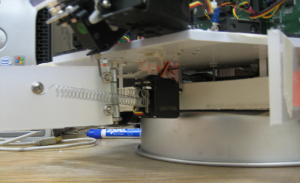

Can Gripper

The can gripper is based on the idea that simple is better. It is strong yet light. The gripper uses friction between the surface of rubber bands and the can for grabbing and lifting purposes. Two RC Servos are used to control the can gripper: one for controlling lifting angles and the other for opening and closing the gripper.

The basic premise of our gripper design was to lift the can out of the way of the golf ball collector without obscuring the vision system. We also designed the gripper to be robust to errors in the sensed position of the can.

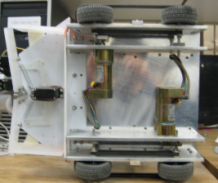

Golf Ball Collecting Mechanism

The goal of the golf ball collecting mechanism is to collect both orange and blue golf balls simultaneously. We were allowed only one RC servo for this mechanism, so it uses two spring-loaded gates. The left compartment holds orange golf balls while the right stores blue golf balls. A "U"-shaped linkage connected to the RC servo opens one gate at a time. A spring closes each gate whenever the servo is not forcing it open.

During the design process, we iterated through a multitude of mechanisms that achieved varying levels of success.

Some prototypes that were ultimately not selected for our final design were:

- the hammer

- whiskers

- a passive collector

- several wheel-based designs

- a rubber-band based golf ball collection mechanism

Sensors

We used three infrared sensors to measure distance to obstacles. A rate gyro was used to measure the rotational rate (angular velocity) of the robot. These sensors, together with wheel encoders and a compass, make up our navigation system.

Camera and Vision

The color camera and a simple vision processing algorithm were used to find a variety of objects including a red soda can, orange golf balls, blue golf balls, green landmarks, and bright lights. We used the centroid calculations of our vision algorithm as feedback to our control algorithm.
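As a rough illustration of using a centroid as feedback, the sketch below turns the horizontal centroid offset into a clamped proportional turn command. The function name, the gain, and the sign convention (positive means turn right) are assumptions made for this example, not the team's actual controller.

```python
def steering_from_centroid(centroid_x, image_width, gain=0.5, max_turn=1.0):
    """Proportional turn command from a blob centroid (hypothetical helper).

    centroid_x  : horizontal centroid of the tracked object, in pixels
    image_width : width of the camera image, in pixels
    Returns a value in [-max_turn, max_turn]; positive is assumed to mean "turn right".
    """
    half_width = image_width / 2
    # Normalized error: -1 at the left image edge, 0 at the center, +1 at the right edge.
    error = (centroid_x - half_width) / half_width
    turn = gain * error
    # Clamp so a far-off-center object cannot saturate the drive command.
    return max(-max_turn, min(max_turn, turn))
```

An object centered in the image yields a zero turn command, so the robot drives straight toward it.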

We use a queue-based thresholding and segmentation algorithm to find objects of interest in our view and compute their position relative to the robot. The code is notable in that it can simultaneously handle multiple objects of different colors in each image frame. The algorithm runs in approximately 19.6 ms. This is slightly faster than the standard vision package provided to the class and has the benefit of searching for multiple objects in each frame. However, it requires roughly one additional image-sized buffer (stored as char elements) compared with the standard package. Since our algorithm examines each pixel only one time, we could avoid building an HSV image array, which would make our memory requirements comparable to the standard algorithm.
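The general idea of queue-based segmentation can be sketched as a breadth-first flood fill over thresholded pixels. This is a minimal illustration under our own assumptions, not the team's code: the function names, the predicate-based `classes` dictionary, and the 4-connectivity rule are all hypothetical, and the sketch makes no attempt to reproduce the timing quoted above (it may also threshold a border pixel more than once).

```python
from collections import deque


def classify(pixel, classes):
    """Return the first label whose threshold test accepts the pixel, else None."""
    for label, accepts in classes.items():
        if accepts(*pixel):
            return label
    return None


def segment(image, classes):
    """Find 4-connected blobs of same-class pixels and return their centroids.

    image   : list of rows; each row is a list of (r, g, b) tuples
    classes : dict mapping a label to a predicate (r, g, b) -> bool (assumed format)
    """
    height, width = len(image), len(image[0])
    # One byte per pixel, comparable to the extra image-sized buffer described above.
    visited = bytearray(width * height)
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if visited[y0 * width + x0]:
                continue
            visited[y0 * width + x0] = 1
            label = classify(image[y0][x0], classes)
            if label is None:
                continue  # background pixel
            # Grow the blob breadth-first from this seed using a FIFO queue.
            queue = deque([(x0, y0)])
            count = sum_x = sum_y = 0
            while queue:
                x, y = queue.popleft()
                count += 1
                sum_x += x
                sum_y += y
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if not (0 <= nx < width and 0 <= ny < height):
                        continue
                    if visited[ny * width + nx]:
                        continue
                    if classify(image[ny][nx], classes) == label:
                        visited[ny * width + nx] = 1
                        queue.append((nx, ny))
            blobs.append({"label": label, "area": count,
                          "centroid": (sum_x / count, sum_y / count)})
    return blobs
```

Because every blob carries its own label, a single pass over the frame can report, for example, a red can and several blue or orange balls at once.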

The segmentation algorithm depends on HSV/RGB threshold values for each object. Determining reliable values for these thresholds can be difficult, so we wrote a MATLAB program to interactively select valid pixels and compute threshold ranges, both in RGB and HSV.
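The range computation itself is simple once pixels have been selected. The sketch below is written in Python rather than MATLAB and leaves out the interactive selection. The function name and the output format are assumptions for illustration, and the per-channel min/max rule is one plausible choice, not necessarily what the original tool did.

```python
import colorsys


def threshold_ranges(pixels):
    """Compute per-channel (min, max) ranges from hand-selected sample pixels.

    pixels : non-empty list of (r, g, b) tuples with values in 0..255
    Returns a dict with "rgb" ranges in 0..255 and "hsv" ranges in 0..1.
    """
    # Convert each sample to HSV; colorsys expects channel values in 0..1.
    hsv_pixels = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
                  for r, g, b in pixels]
    # zip(*...) regroups the samples by channel so min/max apply per channel.
    rgb_ranges = [(min(channel), max(channel)) for channel in zip(*pixels)]
    hsv_ranges = [(min(channel), max(channel)) for channel in zip(*hsv_pixels)]
    return {"rgb": rgb_ranges, "hsv": hsv_ranges}
```

One caveat of this simple rule: hue wraps around at red, so samples of a red object can fall near both 0 and 1 and produce a hue range that covers almost the whole circle. A practical tool would need to handle that case separately.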

Strategy

Our robot uses an event-based planner built on a number of landmarks and triggers, with (mostly) closed-loop control between events. A high-level state machine determines the current objective and selects, from a low-level state machine, the appropriate behavior-based controller to complete each objective. To achieve a fast time, we designed the robot to traverse the course once, with minimal backtracking.
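A two-level, event-driven planner of this kind can be sketched as a transition table plus a lookup from objective to behavior. Everything named below (the objectives, the event strings, and the placeholder behaviors that just return a description) is hypothetical and chosen for illustration; the actual states and triggers used on the robot are not listed here.

```python
from enum import Enum, auto


class Objective(Enum):
    """High-level objectives (hypothetical, simplified set)."""
    FIND_CAN = auto()
    DELIVER_CAN = auto()
    DONE = auto()


# Hypothetical events: each (objective, event) pair names the next objective.
TRANSITIONS = {
    (Objective.FIND_CAN, "can_gripped"): Objective.DELIVER_CAN,
    (Objective.DELIVER_CAN, "can_released"): Objective.DONE,
}


# Placeholder low-level behaviors; real ones would compute motor commands.
def approach_can(sensors):
    return "steer toward can centroid"


def follow_wall(sensors):
    return "follow wall"


def stop(sensors):
    return "stop"


BEHAVIORS = {
    Objective.FIND_CAN: approach_can,
    Objective.DELIVER_CAN: follow_wall,
    Objective.DONE: stop,
}


class Planner:
    def __init__(self):
        self.objective = Objective.FIND_CAN

    def step(self, events, sensors=None):
        """Apply any triggered events, then run the behavior for the objective."""
        for event in events:
            # Events that do not apply to the current objective are ignored.
            self.objective = TRANSITIONS.get((self.objective, event),
                                             self.objective)
        return BEHAVIORS[self.objective](sensors)
```

Keeping the transitions in a table makes it easy to inspect or change the plan without touching the behaviors themselves.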

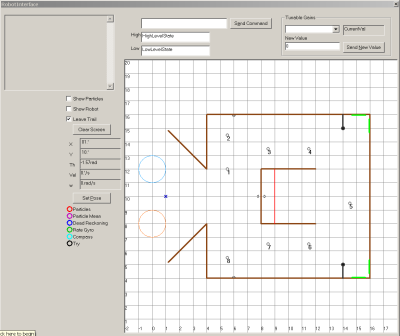

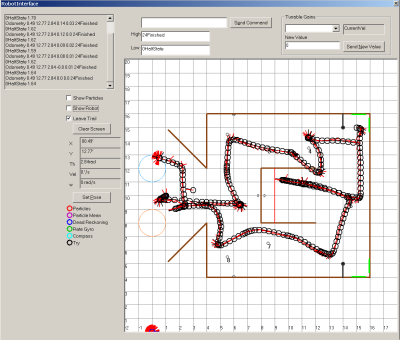

Robot Interface

A graphical user interface programmed in Visual Basic was used to display the robot's current position, path, and current high- and low-level states. A list box and control buttons allowed us to view and modify all gains, states, and several other settings wirelessly while the robot was on the course.