Computer Vision Implementation, Gesture Recognition

Alex Brinkman, Matt Frisbie

Ball Tracking and Command Chaining

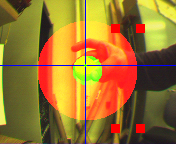

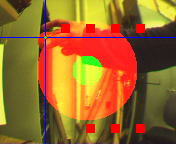

This is our simplest method of commanding the robot. A deadzone in the center of the camera frame, shown in green, resets the program to accept new commands. The vision processing searches for an orange ball and tracks its centroid in the camera's pixel coordinate frame. When the ball is brought into the deadzone, a timer begins. For each second the ball remains in the deadzone, the program adds another command to look for, up to 5 commands. Each command consists of a reference velocity and a turn gain. To issue a command, the user moves the ball out of the deadzone and past a second, concentric circle, shown in red. The point at which the ball's centroid crosses the red action circle determines the command: the reference velocity is the point's projection onto the y-axis, and the turn gain is its projection onto the x-axis. The user then returns the ball to the center deadzone and performs the next command. After all commands have been accepted, the robot executes the chain in sequence, 1 second per command.
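The mapping from ball position to command can be sketched as follows. This is a minimal illustration, not the project's code: the function names, the normalization by the action-circle radius, and the sign convention (ball moved up in the image means positive velocity) are all assumptions made for the example.

```python
import math

MAX_COMMANDS = 5  # from the text: at most 5 chained commands


def commands_requested(dwell_seconds):
    """One command slot per full second spent in the deadzone, capped at 5."""
    return min(int(dwell_seconds), MAX_COMMANDS)


def zone(point, center, dead_radius, action_radius):
    """Classify the ball centroid relative to the two concentric circles."""
    dist = math.hypot(point[0] - center[0], point[1] - center[1])
    if dist <= dead_radius:
        return "dead"      # inside the green deadzone
    if dist <= action_radius:
        return "between"   # left the deadzone, not yet past the red circle
    return "action"        # crossed the red action circle


def command_from_exit_point(point, center, action_radius):
    """Project the exit point onto the frame axes.

    Assumption: values are normalized by the action radius, and the image
    y-axis (which grows downward) is flipped so that "up" is positive.
    """
    turn_gain = (point[0] - center[0]) / action_radius
    velocity = (center[1] - point[1]) / action_radius
    return {"velocity": velocity, "turn_gain": turn_gain}
```

For example, with the frame center at (160, 120) and a hypothetical action radius of 60 pixels, a ball leaving the circle straight up at (160, 60) would give a velocity of 1.0 and a turn gain of 0.0.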

We have shown that we can send commands to the robot and have it execute them. This algorithm shows that we can chain a variable number of commands for later use. Now that we are more familiar with the system and its commands, we wish to find a more interesting application of computer vision.

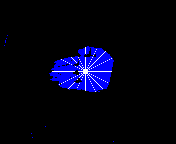

Hand Gesture Recognition Using Discrete Polarization

We decided that hand gesture recognition would be an interesting application of this vision processing and would give the project sufficient complexity. To recognize hand gestures, the program begins by thresholding the hand from the background. The centroid of the hand is found, and polar rays are drawn from the centroid to the edge of the hand. These rays provide a discrete number of length measurements from the centroid to the hand's edge. For a finite set of gestures, statistical data was recorded and analyzed to find the average length and variance of each ray for each gesture. The hand seen in real time is measured the same way and compared to these pre-learned gestures; if the match is sufficiently close, the robot recognizes the gesture.
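A minimal sketch of the ray measurement is shown below, assuming the thresholded hand is available as a binary mask stored as a list of rows. The function names, the default of 16 rays, and the scoring rule (mean squared deviation scaled by the learned variance) are illustrative assumptions; the text does not specify how the match was scored.

```python
import math


def centroid(mask):
    """Mean (x, y) position of all foreground pixels in a binary mask."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        raise ValueError("mask contains no foreground pixels")
    return sum_x / count, sum_y / count


def ray_lengths(mask, num_rays=16):
    """Step outward from the centroid along evenly spaced angles and
    record how many pixels each ray travels before leaving the hand."""
    cx, cy = centroid(mask)
    height, width = len(mask), len(mask[0])
    lengths = []
    for k in range(num_rays):
        angle = 2 * math.pi * k / num_rays
        dx, dy = math.cos(angle), math.sin(angle)
        r = 0
        while True:
            x = int(round(cx + (r + 1) * dx))
            y = int(round(cy + (r + 1) * dy))
            inside = 0 <= x < width and 0 <= y < height
            if not inside or not mask[y][x]:
                break
            r += 1
        lengths.append(r)
    return lengths


def match_score(lengths, means, variances):
    """Assumed metric: mean squared deviation from the learned average,
    scaled by the learned variance of each ray. Lower is a closer match."""
    total = 0.0
    for length, mean, var in zip(lengths, means, variances):
        total += (length - mean) ** 2 / max(var, 1e-6)
    return total / len(lengths)
```

A gesture would then be accepted when `match_score` against its stored means and variances falls below some tuned threshold. Because the ray lengths are in pixels, they scale with the hand's distance from the camera.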

This approach required a lot of data crunching and trial and error to arrive at a methodology for recognizing these gestures. In the end, our evaluation is that this method of gesture recognition is too limited for general use. The size and shape of the hand are user-specific, so any such system would have to be fine-tuned to the user. The recognition is sensitive to visual noise and to the distance of the hand from the camera. We believe the relatively low pixel resolution hinders this approach greatly, resulting in short polar ray lengths and adding high variance to the gesture matching. A more creative gesture recognition method is needed to work within the limitations of this robotic system.

Finger Recognition

This approach is rather simple yet works well within this system. As before, the hand is thresholded and its centroid found. A circle is overlaid on the hand, blocking out a large portion of the palm. The connected-components algorithm is run again, now searching for disconnected fingertips. We count the number of fingertips found, which indicates how many fingers are outstretched or pointing. If only one fingertip is found, we calculate the angle at which the hand is pointing in the camera frame and can perform any number of commands based on that information, as we did in the first program (ball tracking).
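The steps above can be sketched as follows, again assuming a binary mask stored as a list of rows. The palm radius, the 4-connected flood fill, the `min_area` noise filter, and the angle convention (0 degrees to the right, 90 degrees up in the image) are assumptions for illustration only.

```python
import math
from collections import deque


def find_fingertips(mask, palm_radius, min_area=1):
    """Blank a circle around the hand centroid, then group the remaining
    foreground pixels into 4-connected blobs (candidate fingertips)."""
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    if not pixels:
        raise ValueError("mask contains no foreground pixels")
    cx = sum(x for x, _ in pixels) / len(pixels)
    cy = sum(y for _, y in pixels) / len(pixels)

    # Keep only pixels outside the palm circle.
    remaining = {(x, y) for x, y in pixels
                 if math.hypot(x - cx, y - cy) > palm_radius}

    blobs = []
    while remaining:
        seed = remaining.pop()
        queue = deque([seed])
        blob = [seed]
        while queue:
            x, y = queue.popleft()
            for nb in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if nb in remaining:
                    remaining.remove(nb)
                    queue.append(nb)
                    blob.append(nb)
        if len(blob) >= min_area:  # drop specks smaller than min_area
            blobs.append(blob)
    return (cx, cy), blobs


def pointing_angle(center, blob):
    """Angle in degrees from the hand centroid to the fingertip centroid.
    The image y-axis is flipped so that pointing up gives +90 degrees."""
    bx = sum(x for x, _ in blob) / len(blob)
    by = sum(y for _, y in blob) / len(blob)
    return math.degrees(math.atan2(center[1] - by, bx - center[0]))
```

The number of fingers is `len(blobs)`; when it equals one, `pointing_angle` gives a direction that could be mapped to a velocity and turn gain in the same way as the ball position in the first program.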